The Prompt Engineering Tax: Why Ultra-Fast Small LLMs Can Be Slower and Costlier in Practice

In this article:

- LLM model speed is highly circumstantial

- LLM model selection and prompt engineering

- The use case for “fast” models

- The conundrum with small ~8b models

- Gemini Flash vs. Llama 3.2 8b

- Speed vs Quality vs Product Velocity

- My Advice

The Lure Of Speed

LLM platform providers use “tokens per second” and speed scores in their primary marketing messaging. This is true for closed providers like Google (Gemini), OpenAI, and Anthropic, as well as open-source providers like Together AI, Groq, Cerebras, and Fireworks AI.

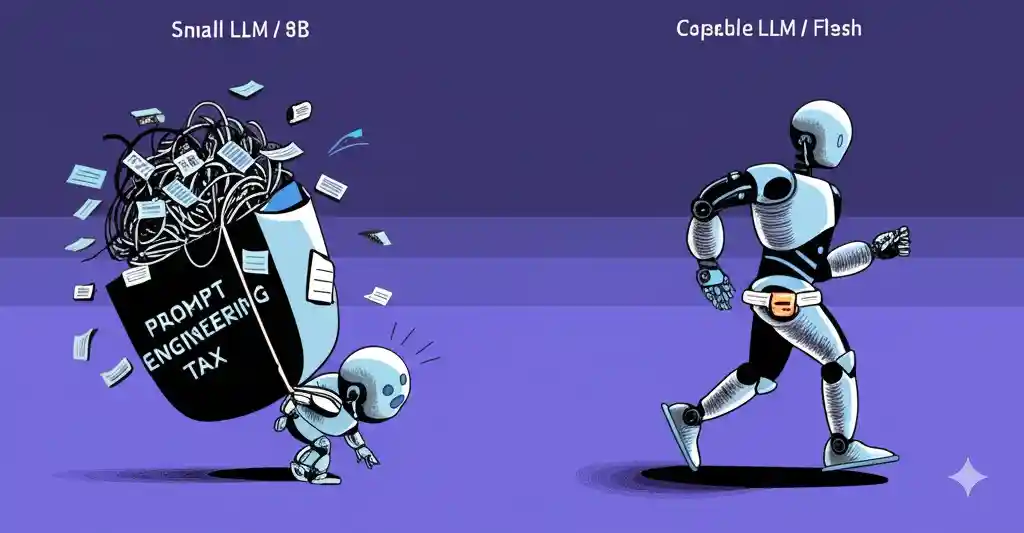

AI engineers are constantly aware of a speed vs quality battle. Generally, larger and smarter models are slower. Smaller and dumber models are faster. However, real-world prompt engineering blurs these lines. Larger models may need only simple prompts and little context. Smaller models may need multi-shot prompts packed with example inputs and outputs.

This article shows how to objectively measure speed against quality, and why that flashy new fast model may not actually be faster in the real world for your use case.

Required: Eval-Driven Development

Eval-driven development (test-driven prompt engineering) is the practice of first establishing LLM-based evaluations (tests) that an LLM’s outputs must satisfy, and then developing a prompt that can pass those evaluations.

This is similar to Test-Driven Development (TDD) in software engineering. However, while TDD is circumstantially useful in software development, eval-driven development is absolutely required for all AI engineering.

This article is not about eval-driven development. It assumes you will use the practice to objectively measure the speed of your AI while maintaining a standard of quality.

Example AI Engineering Scenario

You’re building an AI agent to analyze customer feedback. This agent needs to:

- Understand the sentiment: Is the feedback positive, negative, neutral, or spam?

- Summarize: Create a one-sentence summary for your team.

- Create structured data: Convert the feedback into JSON for your database.

- Route the feedback: Send to customer support, product development, or sales.

This workflow involves multiple decision and data extraction points. Each step is relatively simple, but together they represent a larger challenge.
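To make the target output concrete, here is a minimal sketch of what the structured result of this workflow could look like. The field names, the label strings, and the `parse_analysis` helper are assumptions made for illustration; only the sentiment categories and the three destination teams come from the list above.

```python
import json
from dataclasses import dataclass, asdict

# Label sets based on the scenario above; the exact strings are assumptions.
SENTIMENTS = {"positive", "negative", "neutral", "spam"}
ROUTES = {"customer_support", "product_development", "sales"}


@dataclass
class FeedbackAnalysis:
    sentiment: str
    summary: str
    route: str


def parse_analysis(raw: str) -> FeedbackAnalysis:
    """Parse a model's JSON reply and reject labels outside the allowed sets."""
    data = json.loads(raw)
    result = FeedbackAnalysis(
        sentiment=data["sentiment"],
        summary=data["summary"],
        route=data["route"],
    )
    if result.sentiment not in SENTIMENTS:
        raise ValueError(f"unknown sentiment: {result.sentiment!r}")
    if result.route not in ROUTES:
        raise ValueError(f"unknown route: {result.route!r}")
    return result
```

Validating the reply this way gives the evaluators something strict to check: a response that invents a new sentiment or team fails loudly instead of reaching the database.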

Engineering Decisions

- Can you get away with a fast 8b model?

- Do you need something more sophisticated like GPT-4o, Gemini Pro, or Llama 90b?

- Can this be accomplished with a single prompt?

- Does this need to be broken down into multiple prompts?

- Can they be run concurrently?

Routing the feedback is a step that is highly specific to the business, the teams involved, and the context of the organization. Let’s assume the AI engineer has found that this should be an isolated step with its own prompt.

In order to determine the routing correctly, the AI must:

- Be aware of each team’s function (provided context).

- Analyze the support message for relevance to the possible destination parties.

- Pass 100% of our evaluators (sample inputs and expected outputs).

- Each evaluator is an ASSERT statement that checks the route destination selected by the LLM for a given input.
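The evaluator idea above can be sketched as a small harness. This is an illustration under assumptions, not the setup used for this article: the sample messages, the route labels, and the `keyword_router` stand-in (which replaces the real LLM call so the example runs without an API key) are all hypothetical.

```python
from typing import Callable

# Hypothetical evaluation cases: (customer message, expected route).
EVAL_CASES = [
    ("I was charged twice for my subscription.", "customer_support"),
    ("Please add dark mode to the dashboard.", "product_development"),
    ("Do you offer volume pricing for 500 seats?", "sales"),
]


def run_evals(route_fn: Callable[[str], str], cases=EVAL_CASES) -> float:
    """Return the fraction of cases where route_fn picks the expected route."""
    passed = 0
    for message, expected in cases:
        actual = route_fn(message)
        if actual == expected:
            passed += 1
        else:
            print(f"FAIL: {message!r} -> {actual!r}, expected {expected!r}")
    return passed / len(cases)


def keyword_router(message: str) -> str:
    """Stand-in for the LLM call; swap in a real model client here."""
    text = message.lower()
    if "pricing" in text or "seats" in text:
        return "sales"
    if "add" in text or "feature" in text:
        return "product_development"
    return "customer_support"


if __name__ == "__main__":
    pass_rate = run_evals(keyword_router)
    # The quality bar from the list above: every evaluator must pass.
    assert pass_rate == 1.0, f"only {pass_rate:.0%} of evaluators passed"
```

Because `run_evals` only needs a function from message to route, the same cases can be run against any model and prompt combination, which is what makes the speed comparison later in this article fair.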

Traditional Software Engineering vs. AI Engineering

Traditional software development optimizes for faster and cheaper. Highly skilled software engineers flex by making code run faster with fewer resources and lower costs. However, traditional software development is deterministic.

AI engineering is probabilistic. Making a model call faster or cheaper can change the quality of its output, so response quality must be established up-front with evaluators. Speed and cost improvements can then be attempted, but it’s not always straightforward.

Prompt Engineering: Llama 3.2 8b vs Gemini Flash

On paper, the comparison looks like this:

| Model | Llama 3.2 8b | Gemini Flash |
|---|---|---|
| Speed | Blazing Fast | Fast |
| Cost | Practically Free | Affordable |
| Size | 8b (tiny) | 32b (estimated) |

In the real world, prompt engineering reveals blurred lines.

Prompt Engineering: Llama 3.2 8b

The AI engineer spends 2 hours prompt engineering with Llama 3.2 8b, trying to reach a 100% pass rate on the quality evaluators. Several example input test cases keep failing.

To achieve a 100% passing rate, the engineer adds multi-shot examples to the prompt. Example:

- [prompt text here]

- [examples]

  - example input 1

  - example response 1

  - example input 2

  - example response 2

  - example input 3

  - example response 3

After incorporating a dozen examples into the Llama 3.2 8b prompt (and at the risk of over-fitting), it is finally passing 100% of test cases.
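A minimal sketch of how such a prompt is assembled, and how quickly it grows, is shown here. The `Input:` / `Response:` layout and both helper names are assumptions for illustration, and the token count uses the common rough heuristic of about four characters per token rather than a real tokenizer.

```python
def build_prompt(instructions: str, examples: list[tuple[str, str]], message: str) -> str:
    """Assemble instructions, optional few-shot examples, and the new input."""
    parts = [instructions]
    for example_input, example_response in examples:
        parts.append(f"Input: {example_input}\nResponse: {example_response}")
    parts.append(f"Input: {message}\nResponse:")
    return "\n\n".join(parts)


def rough_token_count(text: str) -> int:
    """Very rough estimate (about 4 characters per token); real tokenizers differ."""
    return max(1, len(text) // 4)


if __name__ == "__main__":
    instructions = "Route the customer message to customer_support, product_development, or sales."
    example = ("I was charged twice for my subscription.", "customer_support")
    message = "Do you offer volume pricing?"

    zero_shot = build_prompt(instructions, [], message)
    twelve_shot = build_prompt(instructions, [example] * 12, message)
    print(rough_token_count(zero_shot), rough_token_count(twelve_shot))
```

Every request pays for the full prompt, so each example added to pass one more evaluator is billed and processed on every call afterwards.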

The cost of this?

- Longer prompts: More input tokens, higher cost.

- Increased latency: Processing larger prompts takes more time.

- Over-fitting risk: Multi-shot prompts can become too tailored to the examples, hindering generalization and corner-case handling.

- More testing time: The more elaborate your prompt becomes, the longer you spend testing output quality for a single AI agent tool when you could be done and working on the next one.
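The first two costs in this list can be estimated with simple arithmetic. The sketch below uses a deliberately simplified model (a fixed overhead plus prompt processing time plus generation time); the `ModelProfile` fields and any numbers you plug in are hypothetical placeholders, not measured values or published prices.

```python
from dataclasses import dataclass


@dataclass
class ModelProfile:
    # All fields are hypothetical placeholders; measure or look up real values.
    price_per_million_input: float   # USD per 1M input tokens
    price_per_million_output: float  # USD per 1M output tokens
    overhead_s: float                # network and queueing time per request
    prefill_tokens_per_s: float      # how fast the prompt is processed
    decode_tokens_per_s: float       # how fast output tokens are generated


def request_cost(p: ModelProfile, input_tokens: int, output_tokens: int) -> float:
    """Cost in USD of one request."""
    return (
        input_tokens * p.price_per_million_input
        + output_tokens * p.price_per_million_output
    ) / 1_000_000


def request_latency(p: ModelProfile, input_tokens: int, output_tokens: int) -> float:
    """Estimated seconds for one request under the simplified model."""
    return (
        p.overhead_s
        + input_tokens / p.prefill_tokens_per_s
        + output_tokens / p.decode_tokens_per_s
    )
```

Comparing a short zero-shot prompt on a pricier model against a long multi-shot prompt on a cheaper one with these two functions shows where the per-token advantage of the small model is eaten up by the extra input tokens.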

Prompt Engineering: Gemini Flash

The AI engineer spends 30 minutes prompt engineering Gemini Flash to reach a 100% pass rate on the quality evaluators. No examples are required to get there.

Prompt Example:

- [prompt text here]

- (no examples)

Smarter models like Gemini Flash are slower and more expensive per token on paper, but they can often achieve the same speed, cost, and quality outcome with shorter, zero-shot prompts.

Model Clarification:

This article discusses Gemini 1.5 Flash (estimated to be around 32B in size), not the smaller Gemini 1.5 Flash-8B model.

API model code: models/gemini-1.5-flash

The aim is to compare models perceived as ultra-fast and small, like Llama 3.2 8b on Groq, with larger, more expensive “fast” models like Gemini 1.5 Flash and GPT-4o mini.

The Tiny Fast Model Conundrum:

Tiny models with high benchmark scores might seem like a panacea for AI speed and cost issues. Your leadership team may see this marketing data and direct the engineering team to swap to these new models. If an eval-driven development practice is in place, it’s highly likely that the need for more complex prompts will negate the claimed advantages, resulting in:

- Unexpected costs: More input tokens eat into cost savings.

- Slower performance: Longer prompts increase processing time.

- Development overhead: Engineering and testing complex prompts is time-consuming.

Real-World Testing:

In my testing I compared Gemini Flash against Llama 3.2 (3B and 8B) on Groq…

Gemini Flash started at a disadvantage. Being a larger fast model, Gemini 1.5 Flash consistently required 500 - 600ms to respond. However, the prompt was simple and zero-shot, and it scored 10/10 on my input scenarios. I spent about 30 minutes putting together the AI Agent tool and it was working great.

My concern was that chaining ten or more 500ms workflow steps creates a slow response for the user. I wanted something faster for my UX. So I looked to Groq and smaller Llama models for a speed solution.
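Whether chained steps add up depends on whether they depend on each other. The sketch below simulates the difference with `asyncio`; `fake_step` is a stand-in that sleeps instead of calling a model, and the step names and latencies are illustrative assumptions. Steps that need the output of a previous step still have to run in sequence.

```python
import asyncio
import time


async def fake_step(name: str, latency_s: float) -> str:
    """Stand-in for one LLM call; sleeps instead of hitting an API."""
    await asyncio.sleep(latency_s)
    return name


async def run_sequential(steps: list[tuple[str, float]]) -> list[str]:
    """Run steps one after another; total time is roughly the sum of latencies."""
    return [await fake_step(name, latency) for name, latency in steps]


async def run_concurrent(steps: list[tuple[str, float]]) -> list[str]:
    """Run independent steps together; total time is roughly the slowest step."""
    return list(await asyncio.gather(*(fake_step(n, l) for n, l in steps)))


def timed(coro) -> tuple[list[str], float]:
    """Run a coroutine to completion and return (result, elapsed seconds)."""
    start = time.perf_counter()
    result = asyncio.run(coro)
    return result, time.perf_counter() - start


if __name__ == "__main__":
    steps = [("sentiment", 0.5), ("summary", 0.5), ("route", 0.5)]
    _, sequential_s = timed(run_sequential(steps))
    _, concurrent_s = timed(run_concurrent(steps))
    print(f"sequential: {sequential_s:.2f}s, concurrent: {concurrent_s:.2f}s")
```

If most steps in a workflow are independent, running them concurrently may recover more user-facing latency than switching models would.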

Groq started with a huge speed advantage. In my initial testing, Groq + Llama 3.2 8b could respond in under 100ms (sometimes under 20ms!) with great results.

However, after hours of testing to get a perfect score on my evaluators, I had created an extensive multi-shot prompt that was potentially over-fit.

With this large multi-shot prompt and perfect score, Groq + Llama 3.2 8b now consistently took 500 - 600ms to respond, much slower than the initial 100ms results. This was now the same speed and cost as Gemini Flash.

I spent 2 hours on prompt engineering to make the tool work as well with Llama 3.2 8b, and ended up with the same speed, cost, and quality outcome. I could have built out multiple tools in the same time period with Gemini Flash.
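Numbers like these are easy to collect for your own prompts. Here is a minimal timing sketch; `measure_latency_ms` is a hypothetical helper, and the callable you pass in would wrap whatever client call you are testing (it is not tied to any particular provider SDK).

```python
import statistics
import time
from typing import Callable


def measure_latency_ms(call: Callable[[], object], runs: int = 10) -> dict[str, float]:
    """Time repeated calls and report median and worst-case latency in ms."""
    samples = []
    for _ in range(runs):
        start = time.perf_counter()
        call()
        samples.append((time.perf_counter() - start) * 1000)
    return {"median": statistics.median(samples), "max": max(samples)}


if __name__ == "__main__":
    # Replace the lambda with a function that sends your real prompt.
    print(measure_latency_ms(lambda: time.sleep(0.01), runs=5))
```

Measure with the final prompt that passes all evaluators, not the first draft; as the results above show, the two can differ by several hundred milliseconds.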

AI Engineering is different

Speed is everything, right? In a world where app frame rates are scrutinized and web pages that take over 3 seconds to load are an abomination, it’s counter-intuitive to lean into slower LLMs.

- You are building AI.

- Your users expect intelligence.

- Poor quality causes churn.

- Poor speed is accepted.

My experience building AI products and talking to customers of AI products has taught me that response quality is paramount. There is no amount of speed gain that can make up for poor quality responses. You should always lean into higher quality.

There are diminishing returns. A 3-second response may be 99% as good as a 15-second response. Use your judgement. It depends on the task at hand. The experience should be as fast as it can be without a material sacrifice in intelligence.

LLMs will get faster

LLMs will get better, cheaper, and faster every 3-6 months.

Spending time optimizing your AI Agent tools and workflows for incremental speed gains today is a waste. In 6 months, a similar quality model will have a faster, cheaper option. Slowing your product development by 50% or more for a modest speed gain isn’t worth it. Iterate on your product faster with the highest quality possible. The speed gains will come when new models arrive.

8b models are highly capable of many simple tasks. My goal is not to discredit them entirely. In my opinion, if you’re struggling to prompt engineer with them at all, just move to a larger model or re-evaluate if the task should be broken down into separate steps.

Focus on quality

Bad responses will cause users to lose trust in your product. It will make them dislike your product. They will probably badmouth it to others or leave a bad review.

Focus on quality and your team’s development velocity. Build your product faster, build a higher quality product, and let the speed boost come automatically every 3-6 months when new models and faster LLM services become available.

A bad review because of slow speed is much better than a bad review because of poor quality. People save so much time compared to doing a task manually that the extra seconds of waiting are worth putting up with. But if the quality is poor, they will move on to the next product that does what they want.

What’s Your Nano-Model Use Case?

Have you successfully used a nano model for an AI Agent workflow tool that wasn’t easily achieved with a heuristic code flow or set of regular expressions?