AI Denialists Are Literally Killing Your Company

2026 isn’t just another year of AI progress. It’s an inflection point where organizational AI culture and belief systems will have real impact on your company’s ability to compete.

Right now, there are opportunities to 10x worker productivity compared to early 2025. AI systems like Claude Code and Claude Cowork, paired with Opus 4.5, unlock multi-agent delegation with git worktrees. A single developer can delegate long-running, 20+ minute AI tasks to coding agents, tending to each one as it needs input.

The belief systems people are creating for themselves around AI, and around what it means to use AI, are having a real impact on worker and business viability.

On November 24, 2025, Anthropic dropped Opus 4.5 for Claude Code. Overnight, developers went from babysitting single-function AI outputs to running complex tasks with multi-file changes.

This change is coming for all knowledge work. 2026 will be a cataclysm.

Garden of AI Delights

Inspired by Hieronymus Bosch: Garden of Earthly Delights

Reception of AI tools has not been rational. People are refusing to believe AI is real. Many experiment with it in a self-sabotaging way to reinforce their bias against it. They are doing this publicly, on X and LinkedIn. They convince themselves their customers want “hand made” code, emails, content. As if they’ll inspect the git commits for signs of human suffering.

Anti-AI Doomers Are Organized

Political leaders and media personalities across the political spectrum have talking points, message maps, PR campaigns, and media tours with a major narrative that AI is bad.

Bernie Sanders released a Senate report late 2025 warning AI could eliminate 100 million U.S. jobs. He’s pitching a “robot tax” and a 32-hour workweek.

“The same handful of oligarchs who have rigged our economy for decades are now moving as fast as they can to replace human workers with what they call ‘artificial labor.’ ” - Bernie Sanders

AOC is warning of “2008-style threats to economic stability” from an AI bubble.

On January 14, 2026, Bloomberg reported that Ron DeSantis is “ratcheting up a campaign against the potential dangers of artificial intelligence and data centers.”

Steve Bannon’s War Room ran a full episode on January 8, 2026, about “AI DOOM”.

“AI has spread out across the world, infecting brains like algorithmic prions, giving the sense that perhaps the entire human race is under threat of getting digital mad cow disease.” — Joe Allen (Bannon’s editor)

“Water usage”, energy costs, “AI is evil”, and “AI robs intellectual property” style talking points have become mainstream takes. Politicians have learned they can build constituencies around AI fear. Doomer algorithms served by Meta and Bluesky are happy to serve that content on an infinite loop.

This rhetoric is poisonous to your company and employees. It gives people a moral device to protect their ego when faced with a threat. They’re not “falling behind”, they’re “taking a stand.” They’re on “the right side of history.”

Companies MUST enforce a strong pro-AI opinion, and have a strategy for training and team alignment to break through that discourse.

A Shrinking Group of Optimists

The Anti-AI movement is growing, surprising early adopters and tech optimists.

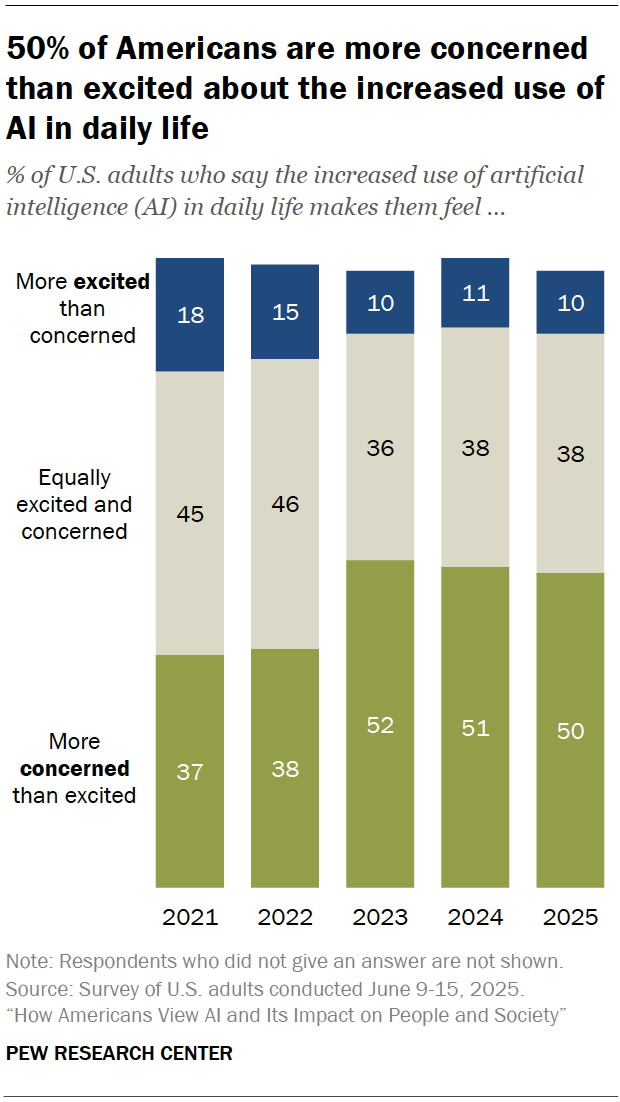

A September 2025 Pew Research study showed that only 10% of Americans are more excited than concerned about AI.

AI gets better, public sentiment gets worse.

- 53% believe AI will worsen people’s ability to think creatively.

- 50% believe it will worsen their ability to form meaningful relationships.

Real Sentiment Divergence

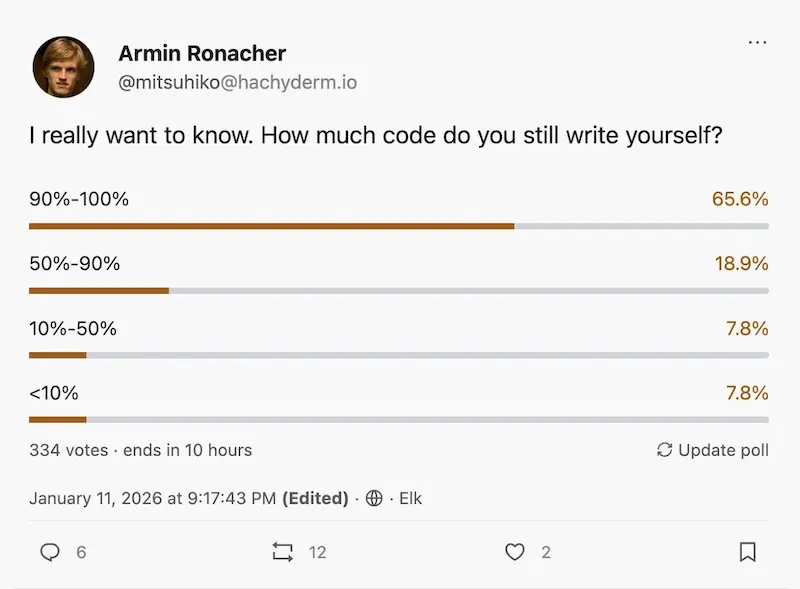

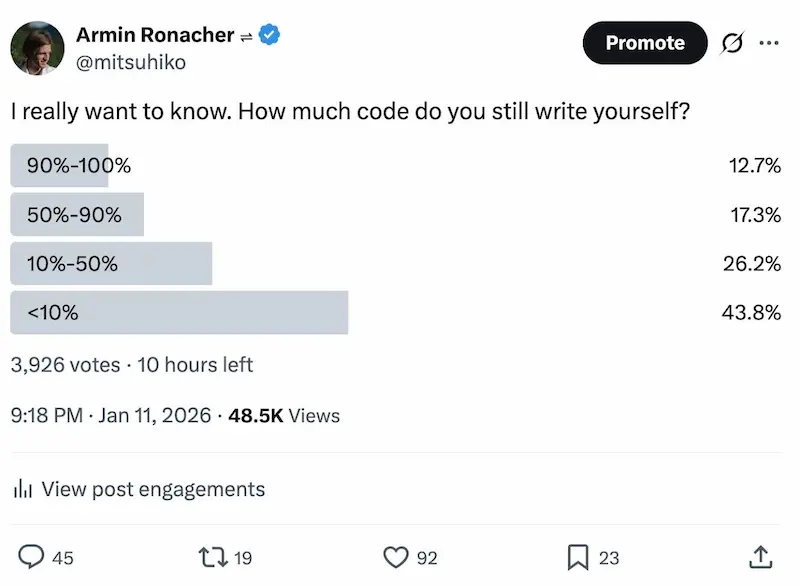

On January 11, 2026, Armin Ronacher, creator of Flask, asked the same question of two different audiences: Twitter / X users and Mastodon users.

I really want to know. How much code do you still write yourself?

The differences are stark.

| Platform | 90-100% manual | <10% manual |
|---|---|---|
| Mastodon | 65.6% | 7.8% |
| X/Twitter | 12.7% | 43.8% |

If you’re building an AI company, genuine belief that AI can help people is a rare asset.

People choose how they feel about AI. That choice reveals openness to change, tolerance for uncertainty, willingness to learn new ways of working. When hiring, you’re evaluating this alongside skills.

Productivity Impact Is Real

- Google’s 2025 DORA report:
  - 90% of developers now use AI tools.
  - Over 80% report enhanced productivity.
- Menlo Ventures State of AI:
  - 50% of developers use AI coding tools daily (65% in top-quartile organizations), reporting 15%+ velocity gains across the development lifecycle.
  - Enterprise AI spend hit $37 billion in 2025, up from $11.5 billion in 2024. 3.2x in one year.

Andrej Karpathy, December 26, 2025: “I’ve never felt this much behind as a programmer. The profession is being dramatically refactored… I have a sense that I could be 10X more powerful if I just properly string together what has become available over the last year, and a failure to claim the boost feels decidedly like skill issue.”

However, gains from AI don’t automatically scale from individual to organization. Faros AI research found that uneven adoption and process mismatches are a roadblock to value. In other words, cultural conflict over “how things are done,” combined with a lack of company alignment and opinions on how to use AI, prevents organizations from actually accelerating.

The Team Culture Imperative

High performance software teams have alignment on how they approach problems and do work. Shared conventions. Shared quality standards. Shared opinions about architecture and patterns.

People who are against AI, or even just not optimistic about it, hold the entire team back. Their hesitation becomes team friction. By refusing to learn a skill and adapt, they create discord and conflict on the team.

Notch is an AI denialist. He refuses to allow himself to see the obvious and real impact AI coding agents are having. He has 3.2 million followers.

many such cases.

Team AI Mindset

To build an AI product, your team must do their work with AI. There’s no way around this. If your team doesn’t experience the pain of context management, prompt iteration, and AI-assisted workflows firsthand, they cannot build products that solve those problems for customers. You can’t fake empathy.

The Skeptic Problem

First impressions are important. We cannot fault people for being skeptical of AI if their first impression came from limited AI models like GPT-4o, Microsoft Copilot, or Chinese models like Minimax 2.1 and GLM 4.7.

We can fault people for refusing to experiment and change their mind as things change. We can fault people for joining an Anti-AI tribe and refusing to accept that it’s a learnable skill.

Human Skill Is Critical

Two people can use the same AI to build the same feature and produce dramatically different results. The difference isn’t the tool. It’s the person’s willingness to learn how to orchestrate and delegate to AI, their skill at context management, and their knowledge of code architecture and conventions. All of these are critical for guiding coding agents.

I’ve seen people scold teammates for using AI. They frame it as a quality concern, but it’s discomfort with change, status anxiety, or ideological opposition in disguise.

Quality In, Quality Out

Team alignment on quality standards matters more than ever. AI doesn’t magically produce good code. It produces code that reflects the standards of the person directing it.

Developers with low coding skill produce low quality results with AI. They accept code that won’t scale. They have no principles or opinions. Everything is six of one, half a dozen of the other. They don’t review carefully. They push code they haven’t validated for edge cases, security, performance issues, observability, exception handling, etc.

Developers with bad code opinions (abstraction and obfuscation artists), clean-code maximalists, people who blindly follow conventions without rationale, also produce terrible code with AI.

Developers with high standards use AI differently. They iterate. They push back on the AI’s suggestions. They review output for alignment with principles, standards, and steer away from traps that cause conflicts, coupling, and technical debt. They validate what they’re shipping.

Good developers use these experiences to build a library of code opinions and principles for the AI, so that it never makes the same mistake twice.

Good teams have alignment on principles, and give everyone’s AI the same baseline ruleset. Without shared principles, ten developers using AI will produce ten incompatible approaches to the same problem.

AI Alignment - Context Mastery

Aligning everyone’s AI tooling with the team’s code opinions and rules is a new hard requirement.

This means:

- Shared baseline context that every agent session starts with

- Documentation of decisions made and why

- Awareness of implications: “if you change X, it affects Y and Z”

- Code opinions captured in the repo, not just in people’s heads

- Integration tests between systems that allow the AI to identify breaking contracts

- Test coverage as an agent guardrail. You may hate coverage rules or test-driven-development when hand-coding. But for AI agents, it provides immediate proof of work, and prevents regressions from other agents in the future.
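The first few items above can be made concrete with a small script. The following is a minimal sketch, assuming a hypothetical repo layout with an `AGENTS.md` rules file at the root and decision records under `docs/adr/`; the function name and the layout are illustrative assumptions, not a convention any particular agent tool requires.

```python
from pathlib import Path


def build_baseline_context(repo_root: Path) -> str:
    """Concatenate shared rules and decision records into one preamble.

    Assumed (hypothetical) layout: AGENTS.md at the repo root and
    decision records stored as docs/adr/*.md.
    """
    sections: list[str] = []

    rules = repo_root / "AGENTS.md"
    if rules.is_file():
        body = rules.read_text(encoding="utf-8").strip()
        sections.append(f"# Team rules\n\n{body}")

    adr_dir = repo_root / "docs" / "adr"
    if adr_dir.is_dir():
        # Sort by filename so numbered records (0001-..., 0002-...) stay in order.
        for adr in sorted(adr_dir.glob("*.md")):
            body = adr.read_text(encoding="utf-8").strip()
            sections.append(f"# Decision record: {adr.name}\n\n{body}")

    return "\n\n".join(sections)
```

Because the context is built from files in the repo, every developer's agent session can start from the same baseline, and changes to it go through normal code review.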

Team Culture Imperative

Teams not fully leaning into AI will never develop these practices. They won’t build the guardrails needed for high velocity parallel agent development… or they won’t be universally adopted by the team. This will lead to code conflicts, arguments during PR reviews, frustrations among team members. And worst of all, they won’t learn through experience.

Teams that have members who scold others’ AI use, or who build barriers to using AI will be left behind.

This Is New

Claude Code + Opus 4.5 was the first Agent + Model that gave us a real taste of the AI dominated future. The multi-agent, parallel development workflow is here now, and it’s accelerating fast.

AI Amplifies Productivity AND Problems

10 developers working in parallel can be fraught with merge conflicts and frustration. With alignment on principles and quality, it’s possible to rarely see conflict. This dichotomy is amplified when developers are each capable of managing multiple agents working in parallel.

AI is an amplifier of your organizational culture. It can supercharge a well-oiled machine, or amplify existing problems: more conflicts instead of fewer, PRs that take longer to review instead of needing barely any review. These are all skill issues, not AI’s fault.

The Three Tiers of Individual Productivity

Not everyone responds to the call for change the same way. These are my three tiers of AI adoption.

Tier 1 - AI-Native

Tier 1 devs run multiple agents in parallel using git worktrees.

- Branch A’s agent is 12 minutes into a new feature and is building and running integration tests as it goes.

- Branch B’s agent just ran into a dependency issue after 5 minutes of work that requires an architectural decision. The developer feeds it additional context and clarification of needs allowing the agent to choose the best option and continue to completion.

- Branch C’s agent just finished a new feature. The developer reviews the summary, notices some red flags, and asks the agent to perform a security self assessment against the team’s security standards and P0 testing guidelines.

While agents work, the human is reviewing output, providing context, making architecture decisions. The job is orchestration. These people capture a 10X productivity gain.

They got here by painstakingly learning how to harness AI for consistent, high quality outputs.

Tier 2 - Default

Tier 2 devs have Cursor or some other code-assistant. They may have tried Claude Code. They hate the idea of paying for AI. They have a cynical approach to using these things. Instead of improving their vibe coding skill set, they mainly stick to autocomplete, or very basic requests.

They write off LLM agent failures as technological limitations rather than their own skill issues. When an LLM fails, it’s evidence that LLMs aren’t good. They put little effort into learning how to make the LLM do better work for longer periods of time on more complex tasks.

Instead of working on multiple tasks in parallel, they scroll on social media while waiting. Probably because they haven’t learned how to get the agent to operate for more than ~1 minute before it needs correction or help.

This developer may have a 0% - 100% productivity gain from AI. They spend less time debugging and reading documentation. But they also get distracted easily. Their AI results require more code review and manual fixes.

Tier 3 - AI Denialist

Tier 3 devs have decided AI isn’t for them. They either deny its usefulness, or feel that AI could never replace them. That may stem from ethical concerns, aesthetic preferences, past bad experiences, or arrogance.

Louis Rosenberg argues that AI denialism is society “collectively entering the first stage of grief” over the possibility that humans may lose cognitive supremacy to artificial systems. It’s not a rational response to evidence. It’s a psychological defense mechanism.

Their social media feeds now serve them AI skepticism content continuously. Their positions harden. They become more confident in their choice as they find others commenting and parroting the same opinions.

They claim that using AI causes loss in critical thinking abilities, that over time it will make you stupid. They use phrases like “AI slop” to dismiss remarkable code, documents, and images produced at the touch of a button. The dismissive language is a tribal marker, a way to signal membership in the skeptic community.

They would read this blog article and claim I am a paid shill, that I MUST have investments in AI and need to sell it to people. That AI is the same as crypto / Web3 scams and has no utility.

These people will be displaced. Some will adapt late. Others won’t adapt at all.

The Customer Doesn’t Care About Your Beliefs

Customers care about time, price, and quality. They compare you to competitors.

“Hand-crafted code” isn’t a purchasing criterion. No customer inspects your git history to verify human authorship. No one asks whether you used AI to draft that contract, write that report, or build that feature. They care whether it works, whether it’s on time, and whether the price is right.

If an AI-assisted competitor ships in 2 weeks what takes you 2 months, you lose.

Organizations that hold back on AI for ideological or cultural reasons will be outcompeted by those that don’t. This is already happening.

For software, legal documents, financial analysis, marketing copy, customer support, the consumer cares about what they get and how they benefit, not the process.

From Programmer to Orchestrator

Programming is a means to building a product. Building requires understanding conventions, principles, and organization. Software developers have moved from programmers and builders to a higher level of abstraction: orchestrators who delegate to agents.

Steve Krenzel’s article AI Is Forcing Us To Write Good Code says: “For decades, we’ve all known what ‘good code’ looks like. Thorough tests. Clear documentation. Small, well-scoped modules. Static typing. These things were always optional, and time pressure usually meant optional got cut. Agents need these optional things though.”

This will hold true even if coding LLMs are 2x better or 10x better than today’s models. What matters now more than ever are team alignment, code opinions, and context mastery.

This is because there are a myriad of ways to approach problem solving, and opinions on how to construct software. The same coding prompt in two different repositories may use totally different approaches to solve the same problem.

Messy code with mixed and confused principles and opinions doesn’t scale. LLMs that are 10x better will still need guardrails and architecture opinions.

When a team of 10 developers are all using LLM agents in a codebase, they need to be working in the same way. The bottleneck is now getting all of the context out of your head and into the LLM.

A developer who can construct the right context for an agent will outperform one who can’t, regardless of their raw coding ability.

Architecture Decision Records (ADRs) as Context

Documentation is mainly for LLMs now. Your future AI sessions need to understand why decisions were made and what tradeoffs were evaluated. When building complex systems, where each of a large number of decisions had its own tradeoffs and alternatives, this documentation becomes critical.

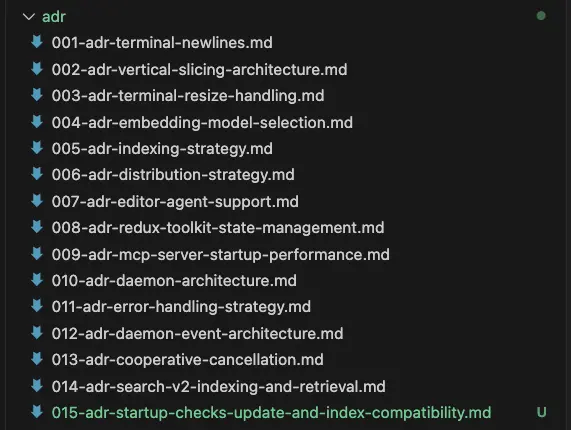

My ADRs for viberag - these are how I keep codex working for a solid hour on new features

LLMs will make recommendations and list pros / cons with limited context. When making an important codebase decision, one tends to spend considerable time filling the agent with context to evaluate tradeoffs, chatting back and forth to deep dive into options. This context won’t be available in future sessions, and must be persisted as documentation for future humans and LLMs to use.

Architecture Decision Records (ADRs) were once considered bureaucratic overhead, but now they are critical snapshots of important decisions in your codebase. They are required to prevent future LLMs from making bad decisions and creating regressions.
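As a rough illustration of what such a snapshot might capture, here is a minimal sketch of a decision record as a data structure that renders to markdown. The class name, field names, and the numbered-filename scheme are assumptions made for this example, loosely modeled on common ADR templates; they are not a description of the ADRs shown in the screenshot above.

```python
from dataclasses import dataclass, field


@dataclass
class DecisionRecord:
    """Hypothetical ADR shape; field names are illustrative, not a standard."""

    number: int
    title: str
    status: str      # e.g. "accepted" or "superseded"
    context: str     # the problem and constraints at decision time
    decision: str    # what was chosen
    alternatives: list[str] = field(default_factory=list)  # rejected options and why
    consequences: list[str] = field(default_factory=list)  # "if you change X, it affects Y"

    def filename(self) -> str:
        slug = "-".join(self.title.lower().split())
        return f"{self.number:04d}-{slug}.md"

    def to_markdown(self) -> str:
        lines = [
            f"# {self.number:04d}: {self.title}",
            "",
            f"Status: {self.status}",
            "",
            "## Context", self.context, "",
            "## Decision", self.decision, "",
            "## Alternatives considered",
            *[f"- {a}" for a in self.alternatives],
            "",
            "## Consequences",
            *[f"- {c}" for c in self.consequences],
        ]
        return "\n".join(lines) + "\n"
```

The "Alternatives considered" section is the part a future session cannot reconstruct from the code alone, which is why it is worth persisting.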

Do we really need this feature?

The ADR will give you a resounding answer to this question.

Architecture That Enables Parallel Agent Coding

“You should treat your directory structure and file naming with the same thoughtfulness you’d treat any other interface.” - Krenzel

Meaning:

- Small, well-scoped files: Separation of concerns, decoupling, single responsibility, etc. This improves Agent context management when searching files and folders for relevant code.

- Semantic naming: `./billing/invoices/compute.ts` communicates more than `./utils/helpers.ts`. Help the AI navigate.

- Explicit, not clever: “grug brain” concepts that help humans also help LLMs. Avoiding unneeded abstractions, avoiding “DRY” principles, adding more context and reasoning to docstrings and code comments, etc.

- Types! Typing shrinks the search space of possible actions. If an agent tries to write invalid data, the type system complains clearly.

- Formatting: LLMs thrive from linters and code formatters too. LLMs can have more consistent outputs by being aware of these rules. They will spend less time making conflicting formatting changes from one edit to the next.
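To illustrate the point about types shrinking the search space, here is a small sketch in Python. The `Currency`, `InvoiceLine`, and `compute_total` names are hypothetical and chosen only to echo the billing example above. Python's annotations are not enforced at runtime, so this sketch relies on an `Enum` and construction-time checks for runtime failures; a static checker would additionally flag mismatched types before the code runs.

```python
from dataclasses import dataclass
from decimal import Decimal
from enum import Enum


class Currency(Enum):
    USD = "USD"
    EUR = "EUR"


@dataclass(frozen=True)
class InvoiceLine:
    description: str
    quantity: int
    unit_price: Decimal
    currency: Currency

    def __post_init__(self) -> None:
        # Fail loudly at construction time instead of deep inside billing logic.
        if self.quantity <= 0:
            raise ValueError(f"quantity must be positive, got {self.quantity}")
        if self.unit_price < 0:
            raise ValueError(f"unit_price must be non-negative, got {self.unit_price}")


def compute_total(lines: list[InvoiceLine]) -> Decimal:
    """Sum line totals; refuses to mix currencies."""
    currencies = {line.currency for line in lines}
    if len(currencies) > 1:
        raise ValueError("cannot total lines in mixed currencies")
    return sum((line.quantity * line.unit_price for line in lines), Decimal("0"))
```

An agent that tries `Currency("XYZ")` or a zero quantity gets an immediate, specific error it can act on, rather than a silent bad record.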

Vertical Slice Architecture

More important than ever is avoiding monolithic layer-based architecture. These tend to scatter a feature across domain-based files and folders, and contribute to merge conflicts. An LLM will gladly program extremely abstracted spaghetti with 7 layers of parameter passing if you are not opinionated about architecture.

Don’t: Layer-Based Architecture

    layered_app/
    ├── main.py             # Main application entry point
    ├── api/                # Routes (controllers in MVC terms)
    │   ├── documents.py
    │   └── users.py
    ├── services/           # Business logic
    │   ├── documents_service.py
    │   └── user_service.py
    ├── repositories/       # Data access logic
    │   ├── documents_repository.py
    │   └── user_repository.py
    ├── schemas/            # Pydantic models for data validation and serialization (input/output)
    │   ├── documents.py
    │   └── user.py
    └── models/             # Database models (SQLAlchemy, etc. - *not* Pydantic schemas)
        ├── documents.py
        └── user.py

Vertical slice architecture separates features into their own folders. It applies the single responsibility principle at the folder level: a folder only changes for its feature. Dependencies between features are explicit and intentional. You can delete a feature by deleting its folder.

This allows a team of dozens of people, each operating a fleet of coding agents, to be working on features in parallel, without having merge conflicts.

    src/
    ├── documents/          # Vertical Slice
    │   ├── routers.py      # slice routes
    │   ├── service.py      # slice business logic
    │   ├── repository.py   # slice database transaction functions
    │   ├── schemas.py      # slice Pydantic schemas (route schema / CRUD schema)
    │   └── models.py       # slice database model (SQLAlchemy table schema)
    ├── users/
    │   ├── routers.py
    │   ├── service.py
    │   ├── repository.py
    │   ├── schemas.py
    │   └── models.py

Stop Coding Like It’s 2022!

If you’re still coding like it’s 2022, you’re NGMI. That was 4 years ago! Older software principles were biased towards abstractions, reduction of duplicate code, and magic box shortcuts that reduce boilerplate (and sometimes cognitive load). LLMs perform worse in architectures like that.

WET not DRY (YAGNI)

Boilerplate is not an issue with LLMs. It doesn’t take longer for the LLM to write something twice. Leaning into decoupling, the single responsibility principle, and write everything twice (WET) prevents tangled concerns in your codebase.

Agents (and humans!) struggle to chase references through 5 abstract helper files. DRY is still there when it’s needed to enforce necessary consistency. But LLMs don’t need it, and don’t need many “human shortcut” coding practices. (YAGNI principle)
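A small sketch of what WET can look like in practice, assuming two hypothetical slices (`documents` and `users`) that each need pagination. Rather than sharing one generic helper with flags for sort key, order, and page size, each slice keeps its own short, explicit function. The function names and dictionary keys are made up for illustration.

```python
# documents/service.py (hypothetical slice)
def paginate_documents(items: list[dict], page: int, page_size: int = 20) -> list[dict]:
    """Documents are listed newest first, 20 per page by default."""
    ordered = sorted(items, key=lambda d: d["created_at"], reverse=True)
    start = (page - 1) * page_size
    return ordered[start:start + page_size]


# users/service.py (hypothetical slice)
def paginate_users(items: list[dict], page: int, page_size: int = 50) -> list[dict]:
    """Users are listed alphabetically, 50 per page by default."""
    ordered = sorted(items, key=lambda u: u["name"].lower())
    start = (page - 1) * page_size
    return ordered[start:start + page_size]
```

The two functions repeat three lines, but either one can change its ordering or defaults without touching the other slice, and an agent reading one file sees the whole behavior.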

Opinionated Architecture Is Important for LLMs

This section is not meant to be a comprehensive review of architecture that scales well with teams and agents. But it is an important reminder that problems with your architecture that cause frequent merge conflicts will be exacerbated by the use of LLM agents.

LLMs on their own will not adhere to architecture opinions, and won’t be consistent over time. Your entire team needs to become architects. If you do not understand how code opinions create impact on the codebase and teams at scale, you will have problems using AI to code.
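One way to make an architecture opinion enforceable rather than aspirational is to check it mechanically. The sketch below scans a vertical-slice layout and reports imports that cross slice boundaries without being explicitly allowed. It assumes each direct subfolder of the source root is a slice and that slices import each other by absolute name (for example `from users.service import get_user`); the function name and the allow-list format are hypothetical.

```python
import ast
from pathlib import Path


def cross_slice_imports(
    src_root: Path,
    allowed: frozenset[tuple[str, str]] = frozenset(),
) -> list[str]:
    """Report imports from one slice into another that are not allowed.

    `allowed` holds (importing_slice, imported_slice) pairs that the team
    has explicitly approved.
    """
    slices = {p.name for p in src_root.iterdir() if p.is_dir()}
    violations: list[str] = []
    for slice_name in sorted(slices):
        for py_file in sorted((src_root / slice_name).rglob("*.py")):
            tree = ast.parse(py_file.read_text(encoding="utf-8"))
            for node in ast.walk(tree):
                if isinstance(node, ast.Import):
                    targets = [alias.name for alias in node.names]
                elif isinstance(node, ast.ImportFrom) and node.module and node.level == 0:
                    targets = [node.module]
                else:
                    continue
                for target in targets:
                    other = target.split(".")[0]
                    if other in slices and other != slice_name and (slice_name, other) not in allowed:
                        rel = py_file.relative_to(src_root).as_posix()
                        violations.append(f"{rel}: {slice_name} -> {other}")
    return violations
```

Run as a test or pre-commit step, a check like this gives an agent the same immediate feedback a reviewer would, before the PR exists.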

Observability At Scale - Automating Feedback Loops

Telemetry, structured logging, and clear audit trails become essential infrastructure, not nice-to-haves. LLMs produce bugs and thrive on feedback loops. Instead of a human manually testing and feeding errors back to the LLM, agents can be enabled to run tests and check this data themselves.

Exception handling practices that obfuscate stack traces, or unexpectedly handle errors in side effects, inhibit an LLM’s ability to detect and solve real problems. If your existing codebase has bad practices like this, the LLMs will mimic those patterns as established precedent.
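A minimal sketch of both ideas using only Python's standard library: a JSON log formatter that keeps the full traceback, and an error wrapper that preserves the original cause instead of swallowing it. `JsonFormatter`, `ConfigError`, and `parse_timeout_seconds` are hypothetical names for this example.

```python
import json
import logging


class JsonFormatter(logging.Formatter):
    """Emit one JSON object per log line so agents and tools can parse it."""

    def format(self, record: logging.LogRecord) -> str:
        payload = {
            "level": record.levelname,
            "logger": record.name,
            "message": record.getMessage(),
        }
        if record.exc_info:
            # Keep the full traceback instead of flattening it into a message.
            payload["traceback"] = self.formatException(record.exc_info)
        return json.dumps(payload)


class ConfigError(Exception):
    """Hypothetical domain error used for illustration."""


def parse_timeout_seconds(raw: str) -> float:
    try:
        return float(raw)
    except ValueError as exc:
        # `raise ... from exc` keeps the original cause in the traceback.
        raise ConfigError(f"invalid timeout: {raw!r}") from exc
```

With the cause chained, a logged traceback shows both the domain error and the underlying `ValueError`, which is the information an agent needs to find the real fault.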

Agents don’t stop at the codebase. Claude Code is perfectly capable of executing the gcloud cli to navigate through logs. It will connect to Sentry to troubleshoot errors. AI denialists are not even aware of these capabilities.

The Continuous Reinvention Requirement

AI capabilities improve significantly every 6 months. Your processes and assumptions need to evolve at the same rate.

- What required human intervention 6 months ago may not anymore.

- Best practices from January become obsolete patterns by July.

- The guardrails you set up for `gpt-5-codex` or `Opus 4.5` may be unnecessary constraints for `gpt-5.2-codex` or `Opus 5.0`.

- The limitations you accepted in June are gone by December.

Retrospectives For AI

Retrospectives that used to focus on the performance of team members now focus on what is and isn’t working with AI. The results of retrospectives can translate directly into an AGENTS.md or CLAUDE.md file.

These docs don’t necessarily build over time. Constraints for old models may be limiting or holding back newer models. This requires continuous experimentation.

Multitasking is back!

With legacy programming, the worst thing you can ask a programmer to do is multitask. Coding without AI agents requires focus and flow state. We hold a lot of moving things in our heads while manually coding.

LLMs allow us to remain at a high level. We can declare what’s important in a document (ADR) or planning session with an LLM, and then let it run for 20 minutes to complete the work.

It’s very easy to use git worktrees to spin up another branch on your machine, and assign it another agent task while you wait.
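As a rough sketch of that setup, the script below wraps `git worktree add` so each task branch gets its own sibling checkout. The helper names, the sibling-folder naming scheme, and the `main` base branch are assumptions for illustration; only the `git worktree add -b <branch> <path> <commit-ish>` form is relied on.

```python
import subprocess
from pathlib import Path


def worktree_add_command(repo: Path, branch: str, base: str = "main") -> list[str]:
    """Build the `git worktree add` call that creates `branch` in a sibling folder."""
    target = repo.parent / f"{repo.name}-{branch.replace('/', '-')}"
    return ["git", "-C", str(repo), "worktree", "add", "-b", branch, str(target), base]


def create_worktrees(repo: Path, branches: list[str], base: str = "main") -> list[Path]:
    """Create one worktree per branch; each can host its own agent session."""
    created: list[Path] = []
    for branch in branches:
        cmd = worktree_add_command(repo, branch, base)
        subprocess.run(cmd, check=True)  # raises if git reports an error
        created.append(Path(cmd[-2]))    # the target path sits just before the base ref
    return created
```

Calling `create_worktrees(Path("."), ["feature/a", "feature/b"])` inside a repository would leave two extra checkouts next to it, each on its own branch, so agents working in them do not touch each other's files.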

The better your guardrails, ADR docs, and planning sessions are, the longer an agent can work autonomously, and the less time you’ll spend on code reviews.

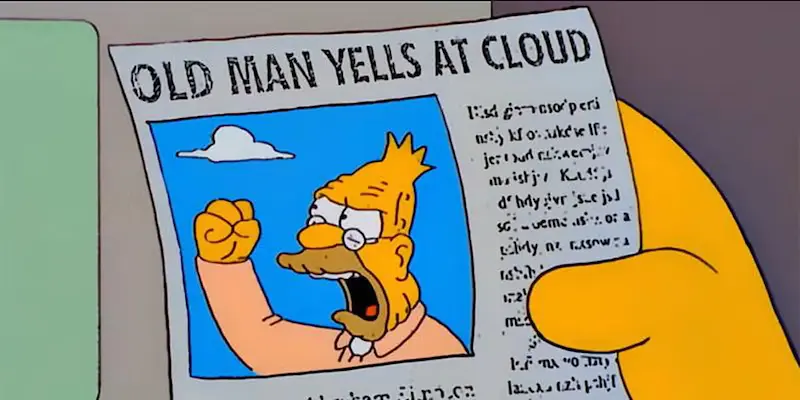

Stages of Multitasking

This is from Steve Yegge’s Gas Town. A prediction of extreme levels of multitasking with agent harnessing. I’m personally at figure 6.

I predict that by the end of 2026, AI-pilled developers will be building software in ways that look totally alien to legacy coders who are still tab-completing 5 line edits with Cursor.

watch this video from Pleometric

how many claude codes do you run at once? gas town? pic.twitter.com/3WjMK2XkQT

— Pleometric (@pleometric) January 15, 2026

A New Approach to PR Reviews

Reviewing code in PRs isn’t just about making comments on problems, or deviations from the team’s conventions. Now it’s about suggesting guardrails and documentation changes so that the AI doesn’t make the same mistakes in the future.

Examples:

- AI using the wrong import format

- AI not following your testing strategy

- AI incorporating too many concerns into a single function

- AI using the wrong docstring format

- AI not aligning with your code organization practices

- AI not complying with your authorization pattern
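Findings like the ones above only pay off if they end up somewhere every agent session will read them. The sketch below appends a review-derived rule to a shared rules file, skipping duplicates. The function name, the heading text, and the choice to append at the end of the file are assumptions for this example; the target could be an `AGENTS.md` or `CLAUDE.md` file.

```python
from pathlib import Path


def add_guardrail(rules_file: Path, rule: str, heading: str = "## Review-derived rules") -> bool:
    """Append a rule to the shared agent rules file unless already present.

    Rules are appended at the end of the file, under `heading` (which is
    created on first use). Returns True when the file was changed.
    """
    text = rules_file.read_text(encoding="utf-8") if rules_file.exists() else ""
    bullet = f"- {rule.strip()}"
    existing_lines = text.splitlines()
    if bullet in existing_lines:
        return False
    if heading not in existing_lines:
        separator = "\n\n" if text.strip() else ""
        text = text.rstrip("\n") + separator + heading + "\n"
    text = text.rstrip("\n") + "\n" + bullet + "\n"
    rules_file.write_text(text, encoding="utf-8")
    return True
```

For example, `add_guardrail(Path("AGENTS.md"), "Use absolute imports inside slices.")` records the fix once, and repeated calls with the same rule leave the file untouched.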

LLMs can be helpful in the PR review process too. You should run agent PR reviews before involving team members, to reduce their review load.

Continuous Adjustments

Initially, your team will need to do a lot of tedious work to break down PR feedback into new prompt context guardrails. You will also need to walk a fine line between excessive prompting and guardrails, and not enough.

Over time, your team will curate an LLM engineering strategy that reduces the errors and mistakes the LLMs are making, aligning it more with your team’s conventions and style.

A Day in the Life—July 2026

- LLM engineering strategy and guardrails have matured over the course of a few months

- Everyone on the team is using git worktrees to manage 2+ branches / features at once

- Coding agents are capable of working 10+ minutes without issues

- Engineers and product are communicating on a daily basis to see how feature ideas and requirements turn into usable prototypes

- More time is spent exploring the problem space, evaluating how things work, thinking strategically about solutions, identifying edge cases, and iterating through prototypes.

- Engineers are learning more about code conventions and architectures that allow LLMs to work on features in parallel with less conflict.

The Claude Code Native Generation Is Coming

They say no one is hiring Junior developers anymore. I predict soon we’ll be hiring mainly juniors.

In May 2026, the first wave of Claude Code native developers will hit the job market.

These are CS graduates and self-taught devs who learned to code with AI from day one. They’ve never known a world where you manually write every line. They have no nostalgia for the craft of hand-coding.

Your cold dead hands are clinging to slow legacy ways of building software. No one cares that you’ve been doing it this way for 20 years. People only care about SHIPPING.

These juniors may understand architecture better than seniors who refuse to use AI.

Why? Because they’re not spending years mastering syntax before they can think about system design. They’re learning patterns, principles, and architecture from the start, because that’s what matters when you’re orchestrating agents. They’re learning the benefits of vertical slice architecture, ADRs, and other Agent parallelization techniques, while their AI handles the implementation details.

A senior developer who refuses to use AI is now competing with a junior who can ship 5x faster and already thinks in terms of context management and agent coordination.

Employers don’t care about seniority. They care about velocity, about shipping product.

Your View On AI Is A Reflection Of Your Mental Health

AI Denialism is cope for a fragile ego, dressed up as principle. It’s grief over losing self-perception of cognitive supremacy. Spouting virtues about AI slop is identity signaling. Your reddit-esque echo chamber gives you validation, not information. Self-selection into communities creates a false worldview as the world you knew crumbles.

Rejecting AI is maladaptive behavior that is not serving you. Your reaction to AI isn’t about AI. It’s about your relationship to your own identity.

- If your identity is “I’m a good programmer,” AI is an existential threat.

- If your identity is “I solve problems and ship product,” AI is a multiplier.

- Denialism protects a brittle ego. Adaptation requires a secure one.

- The people who adapt faster aren’t the smartest, they’re the least attached to “being the smart one.”

Let go of “I’m a developer” and become “I’m a product shipper.” Stop “writing code” and start “architecting solutions”.

Denialists see AI as a threat because they see themselves as replaceable. Optimists see AI as a tool because they see themselves as builders.

Jack Clark, co-founder of Anthropic, called it:

“By summer 2026, people working with frontier AI will feel like they live in a parallel world to people who aren’t.”