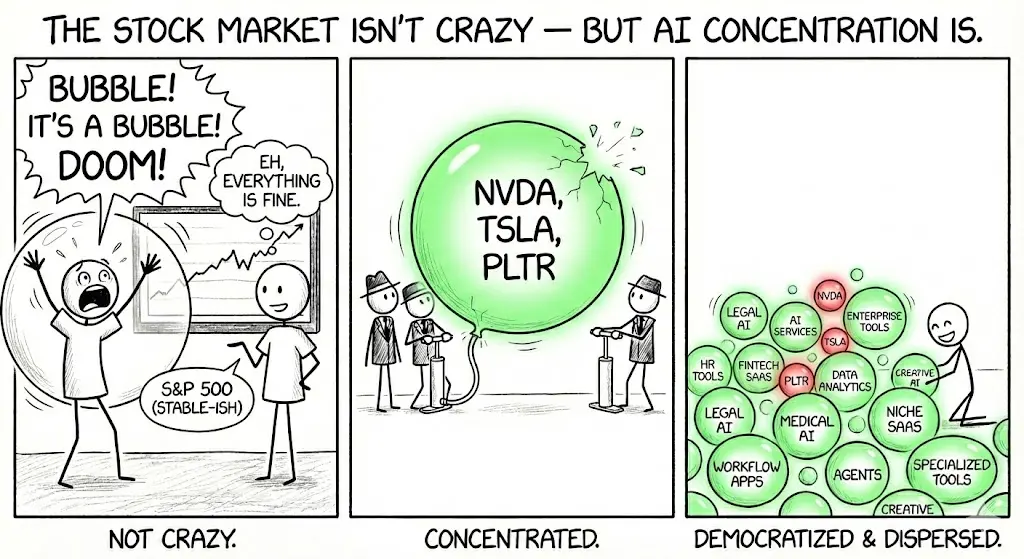

The Stock Market Isn't Crazy — But AI Concentration Is

I don’t think the stock market is actually crazy right now.

But the concentration of AI investment in a small number of companies is crazy, particularly TSLA, NVDA, and PLTR.

Money leaving those names will flow quickly into a larger number of opportunities with higher return potential. Overall, SPY will remain stable.

AI Is Democratizing

AI is democratizing. Switching costs are low. Barriers to entry are surprisingly low. Building an AI monopoly will be much harder than building a search monopoly. There will be no single monolithic AI model dominating the planet.

There will always be opportunities to create specialized AI that is better, faster, and cheaper than monolithic AI in every industry vertical, job, and workflow. The AI arbitrage opportunities here mean that you can do something 10x better, 10x faster, and 10x cheaper than monolithic AI.

I see this personally in the legal industry. State-of-the-art monolithic AI providers (OpenAI, Google, Anthropic) are unusable for rigorous legal analysis, legal drafting, and legal research. Their accuracy only gets you 70% of the way there.

The AI value layer comes from SaaS companies that optimize AI models, AI workflows, AI agents, and AI user experiences around targeted use cases, taking usability from 70% to 95%.

It does not make sense for a frontier provider to optimize all of these things for an incremental TAM of $1B/yr in revenue. That work doesn’t support their valuations or business models. Instead, they compete to be the AI provider selected by the niche industry value layer.

Even if frontier model providers make incremental gains in legal AI competency (or in any other industry’s), there will still be opportunities to build that value layer.

Upcoming Outflows

- Over-invested large players who are in a race to the bottom.

- Frontier AI service providers with high competition and limited ability to differentiate (incremental profit over the cost of infrastructure and electricity). It’s a resource-efficiency game.

- Companies with cultures that refuse to adopt AI or leverage it to maintain competitiveness or productivity.

Opportunities: Cultural Market Turnover

The opportunities we have today are similar to what we have always had. Companies that refuse to adopt modern, innovative practices (today, AI) will lose business to those that do. The reason this happens is mainly cultural. A lot of companies are very slow to change, and it’s hard to steer a Titanic. Change requires firing friends, breaking up political factions, slaughtering sacred cows, and laying off people who slow it down.

We all know people who refuse to use AI as a matter of principle:

- The developer who simply refuses to use it out of ignorance or arrogance.

- The tech lead who discourages AI use on the team because of sloppy PRs, creating a culture of fear around it.

- The nontechnical C-suite executive who saw quality metrics drop when GPT-3.5 was adopted and made it policy not to use AI.

- The VP who sees AI cannibalizing their business and ruining their KPIs, and selfishly crushes innovation.

Companies that fall behind will quickly find that their dwindling revenues no longer support their business structure, and they won’t be able to change fast enough to continue as a going concern.

This will happen at every layer of the market, from IBM-sized professional services companies, to manufacturing, to your local 5-person web shop.

The S&P 500 Will Keep Rotating

The overall S&P 500 should continue to see ~10% annualized gains. But non-AI companies will rotate out for new AI-enabled players.

Even in 2025, a year of massive increases in AI model competence and of companies like NVDA reaching $5T valuations, the S&P 500 has remained stable with a modest ~12% return. The index quietly rotates out underperforming legacy companies in favor of those that are leveraging technology and AI to gain market share in the new economy.

The dying legacy companies incapable of change shrink and rotate out of the mix.

The S&P 500 has rotated 11 companies already this year. A sell-off in NVDA, TSLA, and PLTR will start as investors realize that their upside potential is limited and that more opportunities for greater returns are being created outside the Magnificent 7. This sell-off is likely to flow directly into other S&P 500 companies that are showing massive gains in profitability by out-competing legacy companies and are primed for their own 5x or 10x returns.
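The arithmetic behind "rotation without a crash" can be sketched in a few lines. This is only an illustration under a strong assumption taken from the argument above: every dollar that leaves the concentrated names is reinvested elsewhere in the same index. The bucket names, market caps, and the 25% rotation fraction are all hypothetical placeholders, not real market data.

```python
# Hypothetical market caps in $ trillions. Illustrative numbers only, not real data.
caps = {"concentrated_names": 10.0, "rest_of_index": 40.0}


def rotate(caps, source, target, fraction):
    """Move `fraction` of the source bucket's market cap into the target bucket.

    Assumes capital is fully reinvested inside the index (no net outflow).
    """
    moved = caps[source] * fraction
    result = dict(caps)
    result[source] -= moved
    result[target] += moved
    return result


def weights(caps):
    """Return each bucket's share of total index market cap."""
    total = sum(caps.values())
    return {name: cap / total for name, cap in caps.items()}


# Hypothetical scenario: a quarter of the concentrated value rotates out.
after = rotate(caps, "concentrated_names", "rest_of_index", 0.25)

print(f"total before: {sum(caps.values()):.2f}  total after: {sum(after.values()):.2f}")
print(f"concentration before: {weights(caps)['concentrated_names']:.0%}")
print(f"concentration after:  {weights(after)['concentrated_names']:.0%}")
```

Under that assumption the index total is unchanged while the concentrated share falls. If sellers instead move capital out of the index entirely, the total would drop, so the sketch shows the mechanism being claimed rather than a forecast.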

Doomers: The Market Isn’t Broken

Concentration corrects.

- The overall market is in good shape.

- The real opportunity isn’t in betting on which frontier model wins. It’s in the long tail — the thousands of industry-specific, workflow-specific, job-specific AI applications that turn 70% use-case-specific accuracy into 95%. That value layer is wide open.

- NVDA’s dominance is temporary: competitive chips from Google, Amazon, and Intel are now hitting production, taking global fab capacity away from NVDA along with its room for profit.

- The size of the AI investment pie will continue to increase, but concentration in the market will disperse into a larger number of companies.

- It’s unlikely we’ll see a Google Search-style monopoly, because provider switching costs are so low and the gap between frontier labs is so small.

- Frontier AI will continue to get better, faster, and cheaper.

- The value layer is primed with opportunity for companies willing to move fast.

This isn’t an impending market crash. It’s a market maturing.

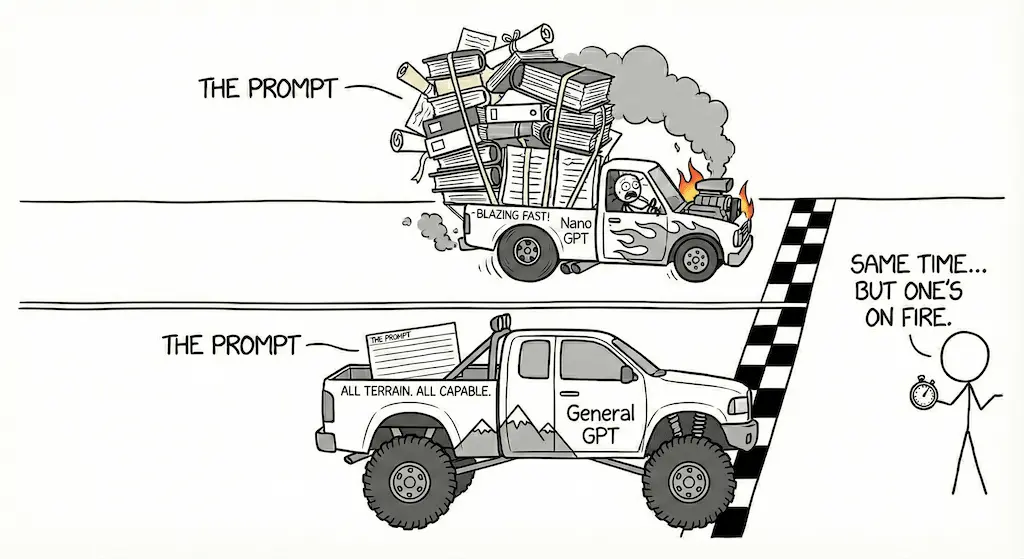

The Prompt Engineering Tax: Why Ultra-Fast Small LLMs Can Be Slower and Costlier in Practice
